The March EN:Insights Forum examined how exposure to automation and AI will impact employment in the U.S., as well as globally. In addition to SHRM’s new research on Jobs at Risk: U.S. Employment in the New Age of Automation, which will be featured in the Spring issue of the People + Strategy journal, SHRM Executive Network (EN) members heard from Peter Cappelli, George W. Taylor professor of management and director of the Center for Human Resources at The Wharton School.

“Jobs that are already highly automated are at a greater risk of being replaced by automation in the future,” Justin Ladner, senior labor economist at SHRM, told forum attendees. “About 1 in 8 workers, about 12.6% of the current U.S. employment, is a group that faces this level of exposure to automation displacement: high or very high exposure.”

The findings explore the distribution of overall automation displacement risk across the U.S. SHRM researchers analyzed federal data, including figures from the U.S. Bureau of Labor Statistics’ (BLS’) 2023 Occupational Employment and Wage Statistics, to determine how automation displacement risk correlates with employment.

Here are four critical insights from the research.

Research Insight 1: SHRM is pursuing a new approach to estimating automation displacement risk that allows this risk to vary within individual occupations. This feature is important, as the decision to automate the same occupation will plausibly vary across organizations according to a wide variety of factors.

Automation displacement risk can vary within an occupation, as not all jobs in a given occupation face the same level of risk.

Research Insight 2: An estimated 12.6% of current U.S. employment (19.2 million jobs) faces high or very high risk of automation displacement.

Distribution of overall automation displacement risk:

- Negligible: 38.9%

- Slight: 23.9%

- Moderate: 24.6%

- High: 10.5%

- Very High: 2.1%
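
As a quick check on how the headline figure relates to the distribution above, the short sketch below adds the two highest categories and confirms the shares sum to 100%. The percentages and the 19.2 million figure come from the research as reported here; the implied total employment base is a back-of-the-envelope derivation and an assumption of this sketch, not a number stated in the research.

```python
# Shares of current U.S. employment by risk category, as reported above (percent).
risk_shares = {
    "Negligible": 38.9,
    "Slight": 23.9,
    "Moderate": 24.6,
    "High": 10.5,
    "Very High": 2.1,
}

# Round to one decimal place to avoid floating-point noise in the sums.
total_share = round(sum(risk_shares.values()), 1)
high_or_very_high = round(risk_shares["High"] + risk_shares["Very High"], 1)

# 19.2 million jobs is the figure reported for the high/very high group.
jobs_at_high_risk_millions = 19.2

# Assumption: dividing the reported job count by the reported share gives a
# rough implied employment base. This is derived here, not stated in the research.
implied_total_millions = jobs_at_high_risk_millions / (high_or_very_high / 100)

print(f"All categories: {total_share}%")
print(f"High or very high: {high_or_very_high}%")
print(f"Implied employment base: about {implied_total_millions:.0f} million jobs")
```

The combined share matches the "about 1 in 8 workers" framing Ladner used, since 1 in 8 is 12.5%.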

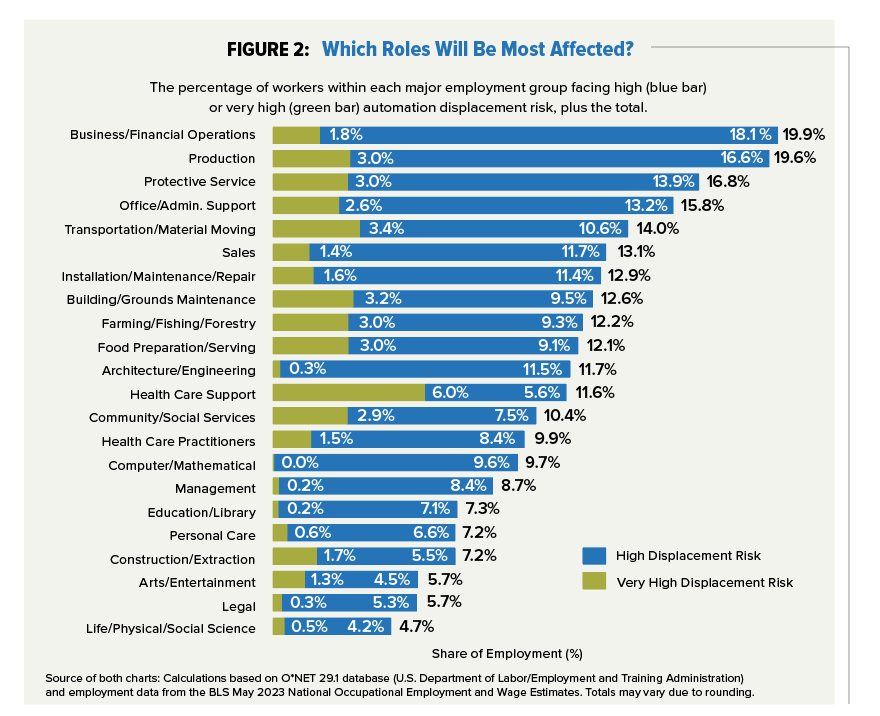

“One big thing is that this risk that we estimate varies a lot by occupational group,” Ladner said. “You can see business/financial operations ranks the highest in terms of overall exposure to high or very high automation displacement risk.”

Research Insight 3: Automation displacement risk varies by occupation, with the greatest risk often concentrated in roles that involve highly routinized tasks.

“You can see business and financial operations ranks the highest in terms of overall exposure to high or very high automation displacement risk,” Ladner said. On the other hand, “physical and social sciences, those are basically research-type jobs we estimate have very low exposure.”

Research Insight 4: Automation displacement will not just depend on technological advancements. It will also depend on nontechnical barriers.

“The research estimates from the report ignore nontechnical barriers to automation that may be very influential in the years ahead,” said Ladner.

For example, airline pilots and air traffic controllers already have jobs that are highly automated, and the technological leap required to move toward full automation in these occupations may be modest. However, in practice, factors such as legal/regulatory barriers and customer preferences make complete automation displacement in these occupations very unlikely in the near future.

Big-Picture Takeaways for HR Leaders

One central theme of this research to date is that HR leaders must understand the specific needs of their organization. Some organizations will find that making major AI/automation investments is an optimal path, whereas others will reach the opposite conclusion. Ultimately, these choices depend on many factors, including (but not limited to) the occupational composition of an organization’s workforce, its scale of operations, its capacity for capital investment, and the preferences of its clients.

Peter Cappelli: The Impact of AI: Are Large Language Models Helping or Hurting?

“If you look at your cellphone when you’ve been trying to fill in a word and it gives you the word it thinks you want to say, that’s the large language model (LLM) making a prediction based on what people have said before,” Cappelli told March’s EN:Insights Forum. “This is really on my mind because I’ve just caught a bunch of my students cheating with ChatGPT. So it turns out the thing it is best at is writing college term papers.”

Peter Cappelli is the George W. Taylor professor of management at The Wharton School and director of Wharton’s Center for Human Resources. He has degrees in industrial relations from Cornell University and in labor economics from Oxford University, where he was a Fulbright Scholar. Cappelli co-wrote the book Managing the Older Worker (Harvard Business Review Press, 2010) and has done research examining changes in employment in the U.S. and practical implications for talent management, employee development, and retention.

Here are a few excerpts from Cappelli’s comments during the March 2025 EN:Insights Forum.

Peter, to kick things off, were there any of Justin’s research findings that really resonated with you?

What we’re trying to do, and Justin and his colleagues are trying to do, is a very difficult thing, and that is making forecasts of the future. We’ve struggled for a very long time at the economic level trying to do this. We didn’t predict the Great Recession very well. We didn’t predict the pandemic very well. We didn’t predict what the Trump administration was going to do with respect to things like DEI very well. And because of that, all our forecasts are just blown up.

So I guess my first point is to tell us not to fixate on forecasts of the future very well because they’re hard to make. They’re often wrong, not because we didn’t try, just because the nature of reality is so uncertain, but it’s also costly when you make mistakes. And that means having a forecast, acting on it, making investments, and then if it’s wrong, often is worse than not taking action at all and just waiting to see how things play out. And that’s my punchline — is wait to see how things play out. ... The question is not what is technically possible. The question is what is practical.

What practical and economic factors should companies consider before adopting automation technologies?

Well, the first thing to recognize is that this is not easy to do and it’s going to take a lot of time and energy. You can’t do it yourself. The big thing we don’t think about is how much data you need in order to make this work. So you need somebody who can walk you through: What is this actually going to require? I think the biggest problem we had is that our chief executives often think this is simple to do. ChatGPT will already write term papers — why can’t it just automate this for us?

So part of what I think HR people need is the ability to defend themselves against uninformed executives at the next level up who think this is simple and easy to do. What you might need is a little training on how to use prompts better, but that’s like an hour’s worth of training really. It’s not hard, and if you were ever going to hire people, the people you should hire are not data scientists. You should hire librarians. Let me just tell you why you want librarians. Because they already know how to ask questions for searches. They also know what data is good data and what data is bad data, because you have to train your large language models on something. If, for example, you want your chatbot to give good answers to customers, what data source are you going to use to train that? They’re the ones that can help you figure out: Use this data, not this data. And that’s good news because believe me, librarians are cheap. Data scientists are expensive. So go get librarians.

How can HR professionals effectively advocate and have productive conversations with their CEOs or their CFOs who are eager to save costs without dismissing their concerns?

Well, I think you have to give them a sense of what this costs. But you have to know enough about what’s involved just to be able to walk them through because my sense is they don’t know what’s involved in automating this stuff. They just think you can bring in this open source ChatGPT, it’s free and it will just take it over for you.

But it’s not available to us to do this kind of stuff yet. That’s the place to start. It’s just how expensive and time-consuming it is to do this kind of work.

Are there any real-world success stories? Any great examples of companies integrating automation while maintaining positive customer experience, employee satisfaction? And what lessons can we learn from those approaches?

The answer is no, not a lot of them, unfortunately, just a lot of claims. But an example of where you could see, here’s what we did and how we did, those are hard to come by. You hear lots of claims and the claims are, you know, we’re automating this or that. And if you ask a lot of executives, and when I ask HR people, a lot of them say they’re using ChatGPT, they’re using large language models. If you drill down on how they are using it, they’re mainly just using it as a search engine the same way you do a Google search. Like they say, it’s giving me job description information about what a job description should look like. Google search would do that for you too. Pilot/Copilot maybe does it fast. That’s fine. But that’s not eliminating jobs, you know? And so I think that’s sort of where we have to start thinking here about defending yourself.

It’s not just the ability to use ChatGPT, is it? Where it’s most useful is in adding things that we’re not now doing. This is good news. So where is it really useful? It will allow us to do things that we’re not doing now because we don’t have time and resources. Like we should never go with our gut again. Because you can go to one of these ChatGPT-like large language models and pretty quickly learn what we already know about something, even if you’re not an expert on it. So that’s something that helps a lot. It’s not going to eliminate anybody’s job because it’s adding a task that we haven’t done before.

Are we gaining jobs, losing jobs, or are titles just changing?

I think the good news is that these tools allow us to do things that we have not done before, and that’s good. The thing that it will be best at is allowing us to look at our own internal data where we’re already awash with data right now. What we’re going to discover if you look, say, for example, at computer programming, as Justin said earlier, we’re probably not going to lose many jobs there. There are large language models to help people write code. But it turns out that studies of computer programmers show they only spent 30% of their time writing code. They spend most of their time negotiating with stakeholders to say, “What do you actually need here? Here’s what I think we can do for you.”

Even if ChatGPT would do all the programming, you still have 70% of the work that’s got to be done by somebody else, right? So I think the short answer is that it’s not going to cut jobs. It will, however, take on new tasks that we’re not doing now.

How can HR leaders stay informed on the latest advancements in AI and automation without getting caught up in this hype cycle?

It is a really important question and a hard one to answer because there’s so many players that have an economic stake in you believing in the hype cycle. And they will sell you solutions and they might be things you don’t need. And it’s quite likely they are far overpromising what they can do. My advice would be to wait until you actually can start seeing examples. And when I say seeing examples, that means where they can actually walk you through what was done. You probably have to get this from one of your fellow HR people who has tried to do this someplace, and they can walk you through “Here was our experience.”

If you think about it, the hype cycle has been going for about a year and a half now and it’s still really hard to find examples where you can say, here’s a job where it absolutely changed. And some of the ones where they did change, you have to hunt through the news to get the story. Do you know the Air Canada story? This is where they basically put an LLM, I think it was ChatGPT, behind their chatbots to answer customer questions. And they did a much better job of making customers happy because they were giving customers solutions that Air Canada didn’t want. Sure, we’ll give you this discount, we’ll allow you to reroute your flight to a different place and we’ll give you a refund and all this kind of stuff. And Air Canada tried to shut it down and tried to take all those things back. The customers sued, Air Canada claimed ChatGPT was autonomous, and they ultimately lost. You know, so the stories that don’t work very well, you’re not going to hear those from the vendors. So you really have to rely on your network of HR people and SHRM to see whether they can find you stories where people will walk you through the good and the bad here.

One thing is training on the problems with using large language models and the risks. And a lot of that has to do with accessing data. If you’re going to try to use it on internal company data, there are a lot of problems with that. Some of it is leaking the data outside, depending whether you have firewalls up on your large language model or not. Some of it is data pollution — somebody in your company puts in some data they want to use and it turns out the data is not very good, but now it’s in there for everybody’s analysis so you need training on using it. I would think seriously in the short term here about restricting the use of these tools for business purposes within the organization, maybe to some office that will run this analysis for you if you need them done. But rather than having 1,000 employees play around with this stuff and make decisions, produce reports. You know, I think the other thing you want them to do is anybody who’s producing any document that uses one of these large language models has to certify first that they’ve used it and that they have checked it.

Because it’s not just [AI] hallucinations that are the problem. It is wrong data, which will give wrong answers. So they need to check everything that’s going to be used in any serious way. So I think that’s where the training comes in, that’s where the rules come in. And if you don’t have those things in place, I would do them right now.

So am I hearing you correctly that it’s more likely that AI will make a mistake than a human will make a mistake?

I think it is more likely that ChatGPT and the large language models will make a massive mistake, and I think it’s more likely that humans will make small mistakes. But that’s why you need everything consequential that is done by a large language model to be checked by a human who knows what the story is roughly like, knows the field enough.

I want to turn to the ethical side of using AI. What are the ethical issues that we need to consider?

The HR specific issues are that large language models and machine learning, all those things are really about optimization questions. They’re not about fairness issues. For example, let’s say I work for Mo and I applied for a promotion and I didn’t get it, right. What would happen now is I would go to Mo and I would complain and Mo, if he was a good supervisor, and I’m sure he would be, he would say, “Let’s look at your case and let me tell you what you could improve next time. And you know, we will try to help you. I can’t promise anything, but we will try to help you, right?”

Let’s say instead we have turned that process of making recommendations for promotion over to a large language model — or more accurately, a machine learning model — that’s predicting who will succeed in a promotion. So I go to Mo and I say, “Mo, I didn’t get this promotion. Why not?” And Mo hands me the phone number for the programmer who created the large language model. It turns out that the programmer can’t tell you because there’s a million things in those models. They’re all, you know, nonlinear combinations of things. They won’t even know the answer to that, right? And what can Mo do for me now? And the answer is nothing. So one of the things we know about supervisors in particular is the way they gain influence with employees is the ability to help employees and the ability to kind of reward them in various ways. We take that authority away from Mo. We take also the ability for him to even explain to me why I didn’t get the promotion. Maybe we get a better outcome in that sense, you know, in that maybe it’s going to predict better who will succeed in this new job. But we’ve undercut what the supervisors do in a big way, and that’s a problem.

These are some of the human aspects of dealing with optimization to make these decisions. We really have to think about these quite hard because there’s an entire industry pushing why this is a smart thing to do. They might very well make better predictions on things, particularly like who gets hired, who gets promoted. But then we have to deal with the human dimensions and we haven’t given those much thought ... the kind of exchange, you know, that you help me, boss, and then I’ll help you later on, goes away when the boss can’t help me as much because he’s not making as many decisions, right? That is an issue.

So what advice would you have for HR professionals who are feeling pressure from leadership to rapidly implement AI and automation but are concerned about the potential unintended consequences that you brought up?

Would you give a promotion to somebody who you personally knew and liked rather than somebody else who appeared to be identical but had a higher score on the predictive algorithm? So far, virtually everybody says I would give it to the person I know and liked. If that’s the case, I would say to my top executives, we shouldn’t bother building these models because people won’t use them. They don’t think they’re fair, they don’t like them, they’re not going to use them. We could spend a lot of time and money building these. They’ll be cool, but people won’t use them.

Do you see any future trends in automation that executives should be aware of as they go forth with their strategic planning?

I don’t know that we’ve got really any evidence of trends now. I would not rely on the people who are building the tools and selling them to you to tell you what those tools can practically do. By the way, right now they’re not telling you what’s practical. They’re just telling you what’s conceptually possible, right? So I guess I would first of all be very nervous about that and be making sure I’m not just listening to them.

If you want to talk to them, ask them for evidence. If the evidence doesn’t include stuff like what did this cost? How long did it take? Just say, you know, when you get that evidence, show me. Come back when you get all that stuff because my sense right now is they’re not telling anybody any of that stuff. Make them show you real evidence of stuff, and maybe that’s the best thing we can do.

If you could leave the audience with one takeaway that you’ve learned along your journey, what would that be, Peter?

I would say, don’t panic and don’t worry yet. Wait until you can actually see stuff happening. There’s no benefit to be on the bleeding edge of technology, right? Let somebody else try all these things that sound exotic and cool, and then see how they work before you jump in. Why not wait and just see what’s happening?
