The Rise of AI Regulations and Corporate Responsibility

Business leaders are discussing the responsible use of artificial intelligence. Soon they may need to walk the talk.

As organizations have increasingly adopted artificial intelligence, especially in their talent management and recruitment efforts, there has been more discussion among business leaders about how to make sure they use AI responsibly. Soon, they may have to walk the talk. A growing wave of regulations could force organizations developing and using AI to implement responsible-use policies.

One upside of AI is simplified decision-making. In machine learning, for example, a computer ingests huge amounts of data and, based on the patterns it detects, derives rules that enable it to make decisions automatically.

Without proper development and use, however, AI systems can be biased—either because of bias in the data itself or in how the algorithm processes the data—and that may result in unintended actions. For example, talent acquisition software may eliminate certain candidates in discriminatory ways if no safeguards are in place.

To guard against such outcomes, experts say, executives who don't already have a process for regularly scrutinizing their organizations' use of AI need to create one.

AI Is Everywhere

AI is being rapidly and broadly adopted by organizations worldwide—often without adequate safeguards. Survey results published in 2022 by NewVantage Partners, a business management consulting firm specializing in data analytics and AI, found that nearly 92 percent of executives said their organizations were increasing investments in data and AI systems. Some 26 percent of companies already have AI systems in widespread production, according to the survey. Meanwhile, less than half of executives (44 percent) said their organizations have well-established policies and practices to support data responsibility and AI ethics.

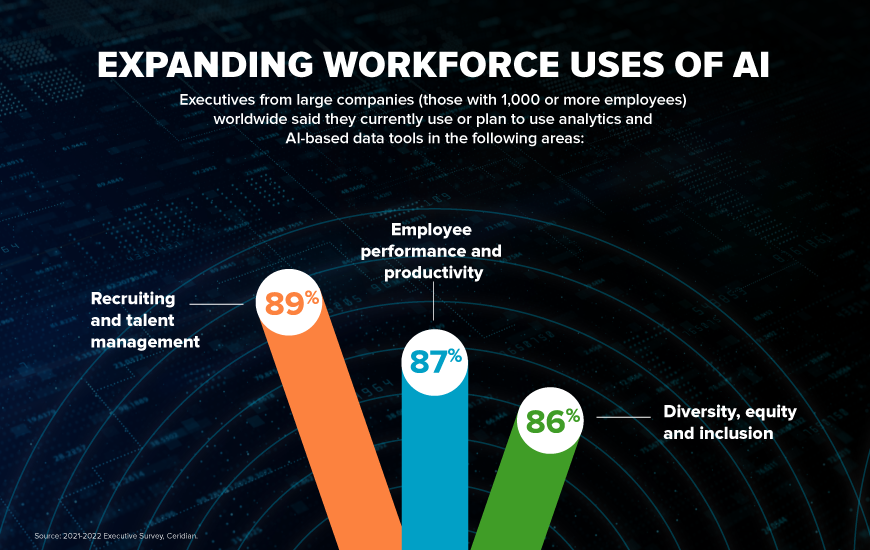

HR consulting company Enspira estimates that more than 50 percent of companies in the United States either have or plan to implement HR platforms that use AI, according to Aileen Baxter, vice president and head of Enspira Insights, a division of the company focused on research. Organizations are increasingly using AI-based tools in areas such as recruiting, employee performance and diversity. Baxter estimates that only 20 percent of these companies are aware that regulations are likely on the way.

"Given the legal scrutiny that is coming, companies need to make sure they are responsible in how they use AI, especially around diversity in hiring," says Baxter, whose company recently published a white paper on AI and bias in the hiring process.

The Regulatory Landscape for AI Tightens

Pressure for oversight is coming from many directions. Over the last year, regulators, including international bodies and U.S. city governments, have begun to focus on the potential for AI to cause harm. (See sidebar on AI regulation.)

Recently, lawmakers introduced legislation in both chambers of the U.S. Congress that would require organizations using AI to perform impact assessments of AI-enabled decision processes, among other requirements. But the regulatory winds really started to blow last spring, when the European Commission, the executive branch of the EU, introduced a proposal to regulate AI using a system similar to the EU's General Data Protection Regulation (GDPR), a sweeping data protection and consumer privacy law. The AI regulation proposes fines for noncompliance ranging from 2 percent to 6 percent of a company's annual revenue.

The New York City Council in December passed a law regulating AI tools used in hiring. That statute carries fines of $500 to $1,500 for violations.

Business groups have responded with their own proposals and recommendations on the responsible use of AI. In January, the Business Roundtable, which represents over 230 CEOs, published a "Roadmap for Responsible Artificial Intelligence" and accompanying policy recommendations.

That same month, the Data & Trust Alliance, a consortium of more than 20 large companies, published its "Algorithmic Bias Safeguards for Workforce," which is designed to help HR teams evaluate vendors on their ability to detect, mitigate and monitor algorithmic bias in workforce decisions.

"This is the first time so many CEOs not only acknowledged the need for AI, but also talked about what companies should do about it," says Will Anderson, vice president of technology and innovation at the Business Roundtable.

Navigating the Complexities of AI Usage

However, there are so many gray areas when it comes to AI that it's hard for companies to implement responsible use on a practical level. What constitutes responsible use, for example? Even the definition of AI itself differs depending on whom you talk to.

Most importantly, many organizations may not even realize they are using AI.

"A lot of companies don't know what they don't know—they don't necessarily understand when they are bringing AI into the organization," says Merve Hickok, SHRM-SCP, an expert on AI ethics. She has worked with national and international organizations on AI governance methods and is founder of AIethicist.org, a website repository of research materials on AI ethics and the impact of AI on individuals and society. "Some have an inkling about potential bias and some of the risks but may not know what questions to ask or where to begin."

Thus, the first step for C-suite executives should be to identify whether their organizations use AI and, if so, where. Some uses are easy to spot. Vendors of specific types of AI-enabled software often call attention to their AI. It's part of their branding, says Ilana Golbin, global responsible AI leader at professional services company PwC.

However, AI can also sneak into your organization. A procurement team may not realize that an HR software package "has some AI capabilities buried deep inside the product," says Anand Rao, global AI lead at PwC. In large enterprisewide platforms, updates from a vendor could add AI functions without much fanfare. They might add a feature that automates e-mail management, for example. Another possible entry point is through cloud service providers, Golbin says. "It's very easy to get democratized tools from cloud providers for building machine-learning systems," she says.

Building Robust AI Governance Frameworks

Once they know what AI their organization is using, executives need to put governance and compliance checks and balances into place. All the responsible AI use principles and guidance from various groups recommend similar types of best practices. Most of them advise, for example, getting documentation from vendors showing how they developed their AI and what data they used. The recommendations also point out that organizations need to continually monitor AI systems because the decisions coming out of AI can change as the data fed into the systems change, which can introduce unintended bias.

"You need to do periodic audits and be able to explain how algorithmic decisions are made," says Ryan McKenney, a member of the cyber, privacy and data innovation team at global law firm Orrick Herrington & Sutcliffe LLP. "Whether or not that's currently required by law, that's just good practice."
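One check a periodic internal review might include is a comparison of selection rates across applicant groups. The sketch below is illustrative only: the group labels and counts are hypothetical, the function names are invented for this example, and the 0.8 threshold is the "four-fifths" rule of thumb from the U.S. Uniform Guidelines on Employee Selection Procedures, not a standard that the article's sources or the laws discussed here prescribe for AI audits.

```python
from collections import Counter


def selection_rates(records):
    """Return {group: share of applicants selected} from (group, selected) pairs."""
    totals = Counter()
    selected = Counter()
    for group, was_selected in records:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def impact_ratios(rates):
    """Divide each group's selection rate by the highest group's rate."""
    best = max(rates.values())
    if best == 0:
        # Nobody was selected, so no ratio can be computed.
        return {group: None for group in rates}
    return {group: rate / best for group, rate in rates.items()}


def flag_groups(ratios, threshold=0.8):
    """List groups whose ratio falls below the threshold (assumed: four-fifths)."""
    return sorted(g for g, r in ratios.items() if r is not None and r < threshold)


# Hypothetical screening outcomes: (group label, passed the automated screen?)
records = (
    [("A", True)] * 40 + [("A", False)] * 60
    + [("B", True)] * 24 + [("B", False)] * 76
)

rates = selection_rates(records)
ratios = impact_ratios(rates)
flagged = flag_groups(ratios)
print(rates, ratios, flagged)
```

A flagged group is a prompt for closer human review, not proof of discrimination. Rerunning the same comparison on each new batch of screening decisions is one way to notice when outcomes shift as the underlying data changes.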

Beyond the tactical, executives need to have a risk and governance strategy and structure in place—and they need to identify the person responsible for overseeing that function. However, AI touches on so many different aspects—technology, risk, compliance, contracts, data privacy, recruitment and retention, to name a few—that organizations often struggle over where that governance responsibility belongs.

Some companies have formed AI steering committees with representatives from various departments and disciplines, notes Shannon Yavorsky, a partner at Orrick and head of its cyber, privacy and data innovation team. Other organizations put AI governance under the data privacy officer because AI depends so much on the use of data. Some companies have even created a new chief AI ethics officer role, according to Amber Grewal, managing director, partner, and chief recruiting and talent officer at Boston Consulting Group.

Meanwhile, law firms and consultants are offering specialized services for AI governance. Orrick advises clients on formulating AI compliance rules for both the development and the use of AI, Yavorsky says. PwC offers advice on and assessments of AI risk for clients, Rao says, although he's careful not to call them audits. That's another gray area. The term audit has not been defined in the context of AI. "There are no standards for doing an algorithmic audit," Rao says. "Such guidelines don't yet exist."


The Journey of AI Ethics and Compliance

Nevertheless, the New York City law, due to go into effect in January 2023, calls for audits of tools that automatically screen job candidates. Hickok notes that the regulation requires an audit for potential bias but doesn't specify who is supposed to conduct it. "The vendor and the employer [using the software] are going to have to come to an agreement on how this is going to work," she says, noting that it could cause a bit of a dispute between them. "But under the Equal Employment Opportunity Commission and civil rights rules, ultimately it's the employer who is responsible for making sure the systems that it uses don't result in discrimination."

While the law applies only to employers in New York City, it's likely to have a much broader impact. "It's hard for the big companies hiring in the city to separate that from the rest of their hiring systems," Hickok says.

Similarly, the proposed EU regulation will likely impact any organization that operates anywhere in Europe. Indeed, observers predict that AI regulation will follow a path similar to the evolution of data privacy regulations: The EU took the lead internationally with GDPR, while governments in other jurisdictions worldwide followed. In the U.S., a patchwork of local, state and federal rules and proposals has emerged.

Although the exact requirements of laws and regulations aren't yet clear, executives should be getting ahead by ensuring they have the procedures and processes in place to demonstrate that their organizations are using AI responsibly.

"The development, deployment and use of AI necessitates C-suite attention," says the Business Roundtable's Anderson. "This is not a check-the-box exercise but will be a continually evolving journey for companies."

Tam Harbert is a freelance technology and business reporter based in the Washington, D.C., area.


Over the past year, regulations and laws have been proposed, and in some cases passed, at the local, federal and international levels regulating the use of artificial intelligence. Here is a timeline of some notable developments.

April 2021: The U.S. Federal Trade Commission (FTC) publishes a blog post emphasizing that it already has enforcement powers under three laws that could apply to AI use: 1) Section 5 of the FTC Act, which prohibits unfair or deceptive practices and could include the sale or use of racially biased algorithms; 2) the Fair Credit Reporting Act, which could come into play if an algorithm was used to deny people employment, housing, credit, insurance or other benefits; and 3) the Equal Credit Opportunity Act, which makes it illegal for a company to use a biased algorithm that results in credit discrimination on the basis of race, color, religion, national origin, sex, marital status, age or because a person receives public assistance.

April 2021: The European Union publishes a proposal for regulating AI. The proposal would create three categories of AI systems (limited risk, high risk and unacceptable risk) and set different requirements for AI systems depending on their risk level.

June 2021: The National Science Foundation and the White House Office of Science and Technology Policy form the National AI Research Resource Task Force. The group will provide recommendations on AI research, including on issues of governance and requirements for security, privacy, civil rights and civil liberties. It is due to submit reports to Congress in May 2022 and November 2022.

October 2021: The U.S. Equal Employment Opportunity Commission (EEOC) launches an initiative to ensure that AI used in hiring and other employment decisions complies with federal civil rights laws. According to the commission, the initiative will identify best practices and provide guidance on algorithmic fairness and use of AI in employment decisions.

December 2021: District of Columbia Attorney General Karl Racine proposes rules banning "algorithmic discrimination," defined as the use of computer algorithms that make decisions in several areas, including education, employment, credit, health care, insurance and housing. The rules would require companies to document how their algorithms are built, conduct annual audits on their algorithms and disclose to consumers how algorithms are used to make decisions.

December 2021: New York City passes a law regulating the use of AI tools in the workplace. The law, which takes effect in January 2023, will prevent employers in New York City from using automated employment decision tools to screen job candidates unless the technology has been audited for bias. Companies also will be required to notify employees or candidates if the tool was used to make hiring decisions.

February 2022: In both chambers of the U.S. Congress, the Algorithmic Accountability Act of 2022 is introduced. The proposed legislation would direct the FTC to promulgate regulations requiring organizations using AI to perform impact assessments and meet other provisions regarding automated decision-making processes.

T.H.


Explore Further

SHRM provides advice and resources to help business leaders better understand the challenges of responsibly using artificial intelligence tools.

Use of AI in the Workplace Raises Legal Concerns

AI tools flooding the HR technology marketplace can bring great value to organizations but also carry significant legal risks if not managed properly. Experts recommend validating new technologies to mitigate risk.

SHRM HR Q&A: Artificial Intelligence

What is artificial intelligence and how is it used in the workplace?

Not All AI Is Really AI

A wide range of technology solutions purport to be driven by artificial intelligence. But are they really? Not everything labeled AI is truly artificial intelligence.

Is Your Applicant Tracking System Hurting Your Recruiting Efforts?

Recent leaps in technology have paid significant dividends in the search for talent, but some AI-based systems may be increasing the worker shortages they were built to address.

EEOC Announces Focus on Use of AI in Hiring

The Equal Employment Opportunity Commission (EEOC) wants to ensure that artificial intelligence and other emerging technologies used in employment decisions comply with federal anti-discrimination laws.

New York City to Require Bias Audits of AI-Type Technology

New York City passed a first-of-its-kind law that will prohibit employers from using AI and algorithm-based technologies for recruiting, hiring or promotion without those tools first being audited for bias.

HR Gets Help Vetting AI Tools

Several companies recently announced they are adopting new measures to vet vendors' artificial intelligence tools in order to mitigate data and algorithmic biases in human resource and workforce decisions.