The Promise and Peril of Artificial Intelligence

Many employers and employees have fallen in love with the capabilities of ChatGPT and other generative AI without considering the potential consequences of their use.

Executives at IQM Software used the most cutting-edge technology to solve an age-old business quandary: how to promote the company without a marketing department or big budget for hiring consultants to create a campaign.

They were especially focused on the dilemma earlier this year when they wanted to publicize their attendance at a trade show and pique interest in their products among eventgoers. The team turned to ChatGPT to solve the problem, and within an hour the artificial intelligence tool generated 12 blurbs that IQM posted on social media. The posts triggered conversations with potential clients, and the project didn't cost anything because the company used the free version of the technology.

"It was insane. I was super, super impressed," says Thibaut Decre, head of strategy at the Rochford, England-based company.

ChatGPT draws on vast data sets and can hold humanlike conversations with users, which enables it to perform a wide variety of tasks, such as writing computer code, summarizing documents and composing essays. Released last November by San Francisco-based OpenAI, it's "the fastest-growing app ever," taking only two months to reach 100 million users, according to Credit Suisse. The Zurich-based bank said it took TikTok nine months and Spotify 55 months to hit the same milestone.

The growth comes despite the technology's potentially serious and dangerous outcomes. It's only as good as the data it has been given, and the information can be incorrect, biased, racist, proprietary or copyrighted. Does IQM know whether the information ChatGPT produced was plagiarized?

"The short answer is I don't," Decre says, adding that he doesn't think the materials were misappropriated because the copy was very specific to IQM. He also notes that there haven't been any complaints.

Newer versions of the technology that require payment can provide data citations, experts say. Still, the problem with ChatGPT and other generative AI products that can produce content is that many employers and employees have fallen in love with the technology's capabilities without considering the potential consequences of its use.

Raising Red Flags

Even tech champions are voicing concern. In March, more than 1,000 industry stalwarts, including Elon Musk, CEO of SpaceX, Tesla and Twitter, and Steve Wozniak, co-founder of Apple, called on AI companies to pause development of new versions of the technology for six months. Musk co-founded OpenAI in 2015 and left three years later after clashing with management. He's now building a new company to compete with ChatGPT.

"AI systems with human-competitive intelligence can pose profound risks to society and humanity," said the letter published by The Future of Life Institute, a Cambridge, Mass.-based nonprofit working to steer technology away from extreme risks. AI labs have been "locked in an out-of-control race to develop and deploy ever more powerful digital minds that no one—not even their creators—can understand, predict or reliably control," the letter said.


"I find this kind of scary," says Peter Cassat, a Washington, D.C.-based partner in law firm Culhane Meadows. "The caution usually comes from the industries that are threatened by the disruption. This [warning] feels different because the caution is actually coming from the innovators themselves."

No AI companies have publicly agreed to the pause, according to a spokesperson for the institute. Separately, authorities in Italy, citing privacy concerns, banned the technology. Italy is the only Western country to adopt that stance, though a handful of other nations, including Russia, China and Syria, forbid its use.

In April, the U.S. Commerce Department asked the public for comments on potential accountability measures and policies to ensure that AI systems are legal, effective, ethical, safe and otherwise trustworthy. The agency said it would issue a report after examining the responses. And four other federal agencies recently pledged to collaborate closely to prevent discrimination resulting from the use of artificial intelligence and automated decision tools in the workplace.

Potential Problems

ChatGPT has been embroiled in some high-profile troubles. Brian Hood, a mayor in Australia, has threatened to sue OpenAI for defamation unless it corrects erroneous information in ChatGPT stating that he served time in prison for a bribery scandal involving a Reserve Bank of Australia subsidiary, according to published reports. He was actually the whistleblower in the case, the reports say.

Meanwhile, on three separate occasions, Samsung employees in Korea put confidential company information into ChatGPT while using it to help them do their jobs, according to reports published in April. The employees' actions came soon after Samsung had reversed a ban on using the technology; earlier this month the company reinstated the prohibition, reports said.

The risk that proprietary information loaded into the technology could be found by others is one reason some companies have banned ChatGPT from their workplaces. It could be a wise decision. Earlier this year, a weeklong Cyberhaven study of 1.5 million workers found that 3.1 percent of them were pasting confidential information into ChatGPT. That means hundreds of pieces of proprietary data were deposited in the technology, according to the Palo Alto, Calif.-based data security firm.

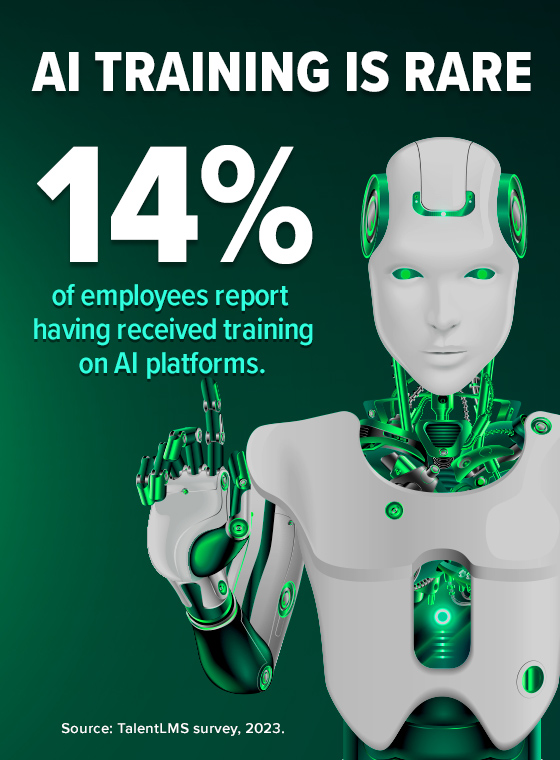

Employees are using the technology despite objections by their employers. Nearly 20 percent of the 700 employees who say they have used ChatGPT at work have done so against their employers' wishes, and 23 percent of them don't want their employers to know they use the tool, according to a study by San Francisco-based TalentLMS, a learning management system.

Yet, amid all the controversy, the tech industry is forging ahead with developing generative AI products. In March, OpenAI launched its most recent version of ChatGPT, and Mountain View, Calif.-based Google introduced Bard, its generative AI product. Microsoft, which owns 49 percent of OpenAI, has added ChatGPT to its search engine, Bing, and its suite of business software. Several other companies are offering similar tools.

Job Worries

Despite the potential pitfalls, ChatGPT's allure for employers and workers is understandable. The TalentLMS study found that 61 percent of employees who used the technology said it improved their time management, while 57 percent said it boosted their productivity. Of course, some of those employees may be less enamored of the technology when they realize it could put them out of a job. Nearly 20 percent of jobs could be impacted by ChatGPT, according to a study by OpenAI and the University of Pennsylvania. Among those most likely to be affected are writers, translators and tax auditors.


Jurgis Plikaitis, chief executive officer of EpicVIN, says ChatGPT will eventually replace some customer service representatives at the Miami-based provider of vehicle histories. Plikaitis says the chatbot can already answer basic questions, and he's working on training it to reply to more complex inquiries.

"It's not going to replace everyone all at once," Plikaitis says. "I didn't think I would see something like this in my lifetime. Its capabilities are growing very fast, and it's becoming smarter and smarter right in front of my eyes."

ChatGPT has already helped the company with its marketing efforts. Plikaitis says he fed the tool the copy on the company's website and asked it to create messaging that would rank the company higher in Google searches. He says visits to the website increased 20 percent.

An outside marketing consultant helped with the project, though Plikaitis says that eventually ChatGPT will eliminate the need for that service. "It's a brave new world," he says.

Smarter Employees

Morgan Stanley is creating a proprietary product that it hopes will make its brokers more effective and efficient. The investment bank and financial services firm has spent the last nine months working with OpenAI to create a system that will give brokers and their assistants access to the vast trove of Morgan Stanley documents, such as research reports, in a way that makes the information easy to retrieve and synthesize. The goal is to help them better serve their clients. No external information will be included.

Jeff McMillan, head of data innovation and analytics for Morgan Stanley Wealth Management in New York City, says that part of the criticism of ChatGPT is that it draws from so many sources. Morgan Stanley's system eliminates potential problems by tapping only its own resources.

By taking this approach, "We believe we are going to produce high-quality, consistent answers," McMillan says.

He says the product can be especially useful for brokers when they're asked about situations they may not normally face. For example, a client may call and say they just inherited a valuable painting and don't know what to do with it. Or a client may need to put financial structures in place to deal with a severely disabled family member.

McMillan says that by making information easier to find, the system will give brokers more time to serve their clients and find new ones. "What we are going to do," he says, "is supercharge our financial advisors to make them better."



Organizations using ChatGPT can face numerous legal risks, such as copyright infringement, privacy violations and plagiarism. As the technology becomes more widespread, it may be time for companies to establish a generative AI policy. But before devising any new protocols, it pays to think about how the company will be using the technology, according to Avi Gesser, the New York City-based co-chairman of the Data Strategy and Security Group at law firm Debevoise & Plimpton.

Organizations, he adds, are still learning what the technology can do. "Do a survey to find out what people are using it for. What do they want to use it for?" Gesser says. "You need to know what you're going to put in the policy."

Gesser recommends adopting the technology slowly, to mitigate as many risks as possible, and taking time to weigh its upside potential against its liabilities. He notes that using the technology may create new legal issues. For example, is it OK for a content creator to charge a client for materials that were partially developed with generative AI without disclosing that fact? Gesser says the client may get upset about paying for a product it thinks it could have developed itself with ChatGPT. The content creator's reputation may suffer in the client's eyes, and there could be a legal risk, too.

Here are some steps Gesser recommends for formulating a policy on generative AI:


Theresa Agovino is the workplace editor for SHRM.

Explore Further

SHRM provides information and resources to help business leaders manage the potential and the risks of using generative AI.

300 Million Jobs On AI Chopping Block?

Goldman Sachs economists predict that 300 million jobs globally could be eliminated through the use of ChatGPT and similar forms of generative artificial intelligence. But those economists note that jobs lost through automation historically have been offset by the creation of new jobs.

ChatGPT and HR: A Primer for HR Professionals

Here's an in-depth look at how ChatGPT can expedite repetitive tasks—and what risks you may run if you rely on it too heavily.

HR Q&A: What Is AI and How Is It Used in the Workplace?

AI refers to computers or computer-controlled machines that can simulate human intelligence in various ways. These machines can range from a laptop or cellphone to computer-controlled robotics.

ChatGPT's Use and Misuse in the Workplace

ChatGPT raises numerous workplace issues, including data protection, infringement and copyright, confidentiality, accuracy, and bias.

How ChatGPT Could Discriminate Against Candidates

The Biden administration is considering putting restrictions on AI tools such as ChatGPT, amid growing concerns that the technology could discriminate against job applicants and spread misinformation.

How to Manage Generative AI and ChatGPT in the Workplace

It's the job of HR to be ready for anything, but the newness of generative AI combined with the dizzying hype and potential legal ramifications can make it feel particularly intimidating to address.

Using ChatGPT Correctly on the Job

ChatGPT is the latest tool that workers are using to get their jobs done more efficiently, but they and their managers need to be aware of possible dangers, including plagiarism, incorrect information and even security risks.

SHRM Resource Hub Page: Future of Work

Employers need to prepare their workforces for the future. While much of the discussion involves technology, other factors, such as remote work and the gig economy, will also play a large role in how work is done.
